Today, on Telerik's blog, Bradley Braithwaite published his post "Top 5 TDD Mistakes". I like commenting on "top N mistakes" kinds of posts, so read Bradley's post first and then let me add my three cents on this one, too.

I'm glad to read someone who is passionate and pragmatic about Test-Driven Development at the same time. Let me quote two important sentences from Bradley's article that I totally agree with:

- It's better to have no unit tests than to have unit tests done badly.

- Unit Tests make me more productive and my code more reliable.

Let me comment on each of the mistakes mentioned in the article.

Not using a Mocking Framework vs. Too Much Test Setup

One may say that these two are a bit contradictory, as using a mocking framework may itself lead to too much test setup. It is, of course, a matter of finding and maintaining a good balance.

If we're testing code that has some dependencies and we're not using a mocking framework, we're not doing unit testing in its pure form, for sure. But let's take my favourite example of testing code that talks to the database - is there really anything better than testing against a real database, run in-memory? Call it an integration test, a half-arsed test or whatever, that's the only reliable method of testing the queries themselves.
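
A minimal sketch of what I mean, in Python with the standard `sqlite3` module. The `users` table, the query and the function names are all made up for illustration; the point is only that the query runs against a real (if throwaway) database engine rather than against a mock of one.

```python
import sqlite3


def find_active_user_names(conn):
    """Hypothetical query under test: names of active users, sorted."""
    rows = conn.execute(
        "SELECT name FROM users WHERE active = 1 ORDER BY name"
    ).fetchall()
    return [name for (name,) in rows]


def make_test_db():
    """Build a throwaway in-memory database with a small, known data set."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE users (name TEXT NOT NULL, active INTEGER NOT NULL)"
    )
    conn.executemany(
        "INSERT INTO users (name, active) VALUES (?, ?)",
        [("zoe", 1), ("adam", 1), ("bob", 0)],
    )
    return conn


def test_returns_only_active_users_sorted_by_name():
    conn = make_test_db()
    try:
        # The real SQL is executed, so a typo in the WHERE or ORDER BY
        # clause would make this test fail.
        assert find_active_user_names(conn) == ["adam", "zoe"]
    finally:
        conn.close()
```

The database lives only as long as the connection does, so each test can start from a clean, known state.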

But in most cases, sure, let's use stubs, but avoid mocks - the difference is very important. Mocks, as a behaviour testing tool, are often overused to check whether the implementation is exactly as defined in the test, written by the same person at the same time - it's like checking if the design we've just intentionally created was really intentional. Let's focus on verifying the actual outcome of the method (in which stubs may help a lot) instead of coupling the test to implementation details.
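
To illustrate the distinction, here is a small Python sketch with a hand-written stub. `StubRateProvider` and `price_in` are hypothetical names invented for this example. The stub only feeds canned data in; the test then asserts on what comes out, not on how the dependency was called.

```python
class StubRateProvider:
    """Stub: returns a canned rate and records nothing about its usage."""

    def __init__(self, rate):
        self._rate = rate

    def get_rate(self, currency):
        return self._rate


def price_in(currency, amount_usd, rates):
    """Hypothetical code under test: converts a USD amount via a rate source."""
    return round(amount_usd * rates.get_rate(currency), 2)


def test_price_is_converted_with_the_given_rate():
    rates = StubRateProvider(rate=0.5)
    # Only the outcome is asserted. How many times get_rate() was called,
    # or with which arguments, is an implementation detail left unpinned.
    assert price_in("EUR", 10.0, rates) == 5.0
```

A mock-based version of the same test would typically add a verification that `get_rate` was called exactly once with `"EUR"`, and would then break on a harmless refactoring such as caching the rate.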

And, agreed, there's nothing worse to maintain than a test suite with those spaghetti-flavoured mock setups.

Asserting Too Many Elements

Agreed. There's a good rule of having a single logical assert per test, even if it physically means several Assert calls. And for me, having any asserts at all is a rule of equal importance.
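
As a quick illustration of "one logical assert, several physical ones", consider this Python sketch. The `parse_version` function is hypothetical and exists only for the example.

```python
def parse_version(text):
    """Hypothetical function under test: 'major.minor.patch' -> three ints."""
    major, minor, patch = text.split(".")
    return int(major), int(minor), int(patch)


def test_parses_all_three_components():
    major, minor, patch = parse_version("2.10.3")
    # Three assert statements, but one logical claim:
    # "the version string was parsed correctly".
    assert major == 2
    assert minor == 10
    assert patch == 3
```

What I would not put into the same test is, say, the behaviour for malformed input - that's a different logical claim and deserves its own test.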

Writing Tests Retrospectively

Agreed again, most of TDD's gain comes from having to think about the implementation before it comes into existence. It can make a developer think not only about happy paths, but also about negative cases and boundary values. It also strongly supports the KISS and YAGNI rules, which are vital for long-living codebases.

Also, I like using TDD to cope with reported bugs. Writing a failing unit (or - again - probably more integration-like) test by recreating the failure's conditions makes analysis easier, helps isolate the root cause of the failure and is often much easier than reproducing the bug in a real-life scenario. A test written retrospectively for a bug serves only as a regression test.
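
A sketch of that workflow in Python, built around an invented bug report ("calculating the average of an empty list crashes"). The `average` function and the chosen fix are assumptions made purely for illustration.

```python
def average(values):
    """Hypothetical function from the bug report.

    The reported version was just `return sum(values) / len(values)`,
    which raised ZeroDivisionError for an empty list. The guard below
    is the fix that was added after the failing test was written.
    """
    if not values:
        return 0.0
    return sum(values) / len(values)


def test_average_of_empty_list_is_zero():
    # Written first, straight from the bug report. It failed with
    # ZeroDivisionError until the guard above was added.
    assert average([]) == 0.0


def test_average_of_non_empty_list_is_unchanged():
    # Makes sure the fix did not alter the existing behaviour.
    assert average([1, 2, 3]) == 2.0
```

The first test both reproduces the failure before the fix and stays in the suite afterwards as a regression guard.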

Testing Too Much Code

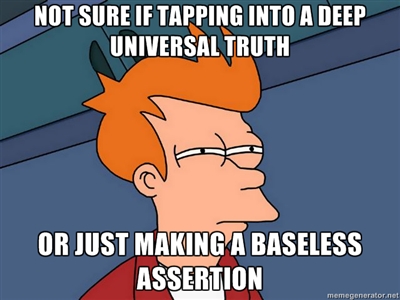

Not a universal truth, but I feel quite the same. I see a lot of value in unit-testing the workhorse parts of my code, and I do create them more or less according to the TDD principles (especially having no production code without a failing test).

But I don't think having 100% code coverage as an ultimate goal makes any sense. I think there's always quite a lot of code that is just not suitable for valuable unit testing, such as the coordinating/organizing kind of code (let's call it composition nodes, as a reference to composition root) that just takes some dependencies, calls several methods and passes things from here to there, not adding any logic and not really interfering with the data. Tests written for that kind of code are often much more complicated than the code itself, due to heavy use of mocks and stubs. Bradley's rule of thumb is what works for me, too: write a separate test for every IF, And, Or, Case, For and While condition within a method. I'd consider the code to be fully covered when all those branch/conditional statements are covered.
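
Here is how I read that rule of thumb, sketched in Python. The `shipping_cost` function and its pricing rules are hypothetical; what matters is that each condition (both sides of the `and`, and the second `if`) gets its own small test.

```python
def shipping_cost(order_total, is_express):
    """Hypothetical method under test with an `and` and two `if`s."""
    if order_total >= 100 and not is_express:
        return 0.0   # free standard shipping for large orders
    if is_express:
        return 15.0  # express is always paid
    return 5.0       # standard shipping for small orders


def test_large_standard_order_ships_free():
    # First `if`: both sides of the `and` are true.
    assert shipping_cost(100, is_express=False) == 0.0


def test_large_express_order_is_not_free():
    # First `if`: left side true, right side false.
    assert shipping_cost(100, is_express=True) == 15.0


def test_small_standard_order_pays_standard_rate():
    # First `if`: left side false; second `if` false as well.
    assert shipping_cost(99, is_express=False) == 5.0


def test_small_express_order_pays_express_rate():
    # Second `if` true for an order below the free-shipping threshold.
    assert shipping_cost(99, is_express=True) == 15.0
```

Four tiny tests cover every condition here, and none of them needs a mock or a stub - which is usually a sign that the code is the "workhorse" kind worth testing this way.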